Tabby

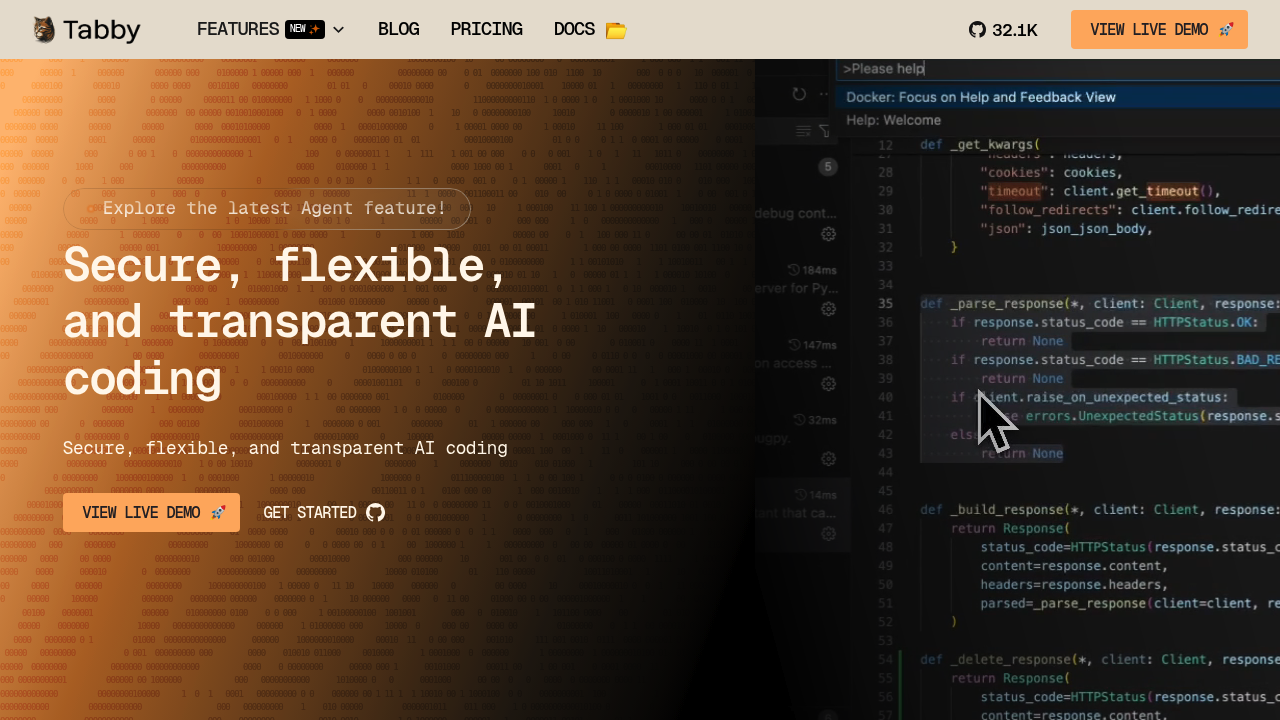

Tabby - GitHub Copilot alternative

Tabby is a self-hosted AI coding assistant that provides an open-source alternative to GitHub Copilot. Teams can set up their own LLM-powered code completion server with ease. The system is self-contained and requires no external database or cloud service. Solo developers and teams who prioritize data sovereignty and infrastructure control might prefer it over cloud-based solutions.

Strengths

- Self-contained architecture eliminates dependencies on external databases or mandatory cloud services

- Compatible with major coding LLMs including CodeLlama, StarCoder, and CodeGen without custom implementation

- Supports consumer-grade GPUs, making deployment accessible without enterprise hardware

- Optimizes the entire stack from IDE extensions to model serving with adaptive caching for sub-second completions

- OpenAPI interface enables integration with existing infrastructure including Cloud IDEs

- Includes Answer Engine for instant coding questions, inline chat, and data connectors for project context
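The OpenAPI interface listed among the strengths can be exercised with any plain HTTP client. The block below is a minimal sketch, not the project's official client. The base URL `http://localhost:8080`, the `/v1/completions` route, the `language`/`segments` body shape, and the bearer-token header are all assumptions to verify against the OpenAPI document served by your own instance. The function names are hypothetical.

```python
import json
import urllib.request

# Assumed defaults; adjust to match your own deployment.
DEFAULT_BASE_URL = "http://localhost:8080"
COMPLETIONS_PATH = "/v1/completions"  # assumed route, check your server's OpenAPI docs


def build_completion_request(prefix, suffix="", language="python",
                             base_url=DEFAULT_BASE_URL, token=None):
    """Build (but do not send) a POST request asking for a code completion."""
    # Assumed body shape: the code before and after the cursor, plus a language tag.
    payload = {
        "language": language,
        "segments": {"prefix": prefix, "suffix": suffix},
    }
    headers = {"Content-Type": "application/json"}
    if token:
        # Assumed auth scheme: a bearer token issued by the server.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + COMPLETIONS_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def request_completion(req, timeout=10.0):
    """Send a prepared request and return the decoded JSON response."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Building the request separately from sending it lets you inspect or log the payload before any code leaves the editor. Calling `request_completion(build_completion_request("def fib(n):\n    "))` needs a running server at the assumed address.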

Weaknesses

- Requires infrastructure setup and maintenance compared to managed solutions

- Needs a capable GPU: NVIDIA T4, 10 Series, or 20 Series for 1B-3B models, and V100, A100, 30 Series, or 40 Series for 7B-13B models

- Limited enterprise support options compared to commercial products

- Completion quality depends on chosen model size and hardware capabilities

- Self-hosting adds operational overhead for updates and monitoring

Best for

- Teams requiring on-premises deployment for compliance or security policies

- Organizations with existing GPU infrastructure

- Solo developers comfortable with self-hosted tools who want data privacy

Pricing plans

- Open Source — Free — Up to 50 users, self-hosted deployment

- Tabby Cloud — Usage-based pricing — Charged based on LLM token consumption with $20 monthly free credits, automatic billing above $10 or at month-end

- Tab Completion — Free — Always free with no usage limits (credit card may be requested for unusually high usage to prevent abuse)

- Team/Enterprise — Custom, billed annually — Enhanced security support, flexible deployment options

Tech details

- Type: Self-hosted AI code completion and chat assistant

- IDEs: Visual Studio Code, VSCodium, all IntelliJ Platform IDEs (IDEA, PyCharm, GoLand, Android Studio) with build 2023.1 or later, Vim

- Key features: Multi-line code completion, full function suggestions, Answer Engine for coding questions, inline chat, data connectors for project context, GitLab Merge Request indexing, repository-level context, LDAP authentication

- Privacy / hosting: Self-hosted on-premises or private cloud. No data sent to external services. Complete data control and retention policies managed by the deploying organization.

- Models / context window: Completion models include StarCoder (1B-15B), CodeLlama, CodeGen, CodeGemma, CodeQwen series. Chat models require minimum 1B parameters (Qwen2-1.5B-Instruct recommended). Context window varies by model (typically 2K-16K tokens depending on model choice).

When to choose this over GitHub Copilot

- Your organization must deploy on-premises for compliance, security, or data sovereignty reasons

- You want full control over model selection and infrastructure without vendor lock-in

- You have consumer-grade GPUs available and want to minimize cloud costs

When GitHub Copilot may be a better fit

- You prefer zero-infrastructure maintenance with a fully managed cloud service

- Your team lacks GPU resources or DevOps capacity for self-hosted deployments

- You need enterprise-grade support with guaranteed SLAs and minimal setup time

Conclusion

Tabby delivers an open-source, self-hosted GitHub Copilot alternative for teams prioritizing data control and infrastructure flexibility. Its self-contained architecture and consumer-GPU support lower the barrier to private AI coding assistance. Organizations comfortable with self-hosting gain customizable model selection and complete data sovereignty. The trade-off is operational responsibility that managed services eliminate.

Sources

- Official site: https://www.tabbyml.com/

- Docs: https://tabby.tabbyml.com/docs/welcome/

- Pricing: https://www.tabbyml.com/pricing

- GitHub: https://github.com/TabbyML/tabby

FAQ

Is Tabby completely free to use?

The open-source version is free for up to 50 users with self-hosted deployment. Tab completion remains free with no usage limits. Cloud-based usage follows a pay-as-you-go model with $20 in monthly free credits.

What hardware do I need to run Tabby?

For 1B-3B parameter models, you need at least an NVIDIA T4, 10 Series, or 20 Series GPU, or Apple Silicon such as the M1. For 7B-13B models, an NVIDIA V100, A100, 30 Series, or 40 Series GPU is recommended.
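The hardware guidance above can be captured as a small lookup for capacity planning. This is an illustrative sketch only. The helper name `recommended_gpus` is hypothetical, and two behaviors are assumptions rather than anything the guidance states: sizes below 1B are treated like the small tier, and sizes between 3B and 7B are rounded up to the larger tier.

```python
# GPU families per model-size tier, as listed in the hardware answer above.
# Each entry is (largest model size in billions of parameters, hardware list).
TIERS = [
    (3.0, ["NVIDIA T4", "NVIDIA 10 Series", "NVIDIA 20 Series",
           "Apple Silicon (e.g. M1)"]),
    (13.0, ["NVIDIA V100", "NVIDIA A100", "NVIDIA 30 Series",
            "NVIDIA 40 Series"]),
]


def recommended_gpus(params_billion):
    """Return the hardware listed for a model of the given size.

    Assumption: sizes that fall in the gap between tiers (for example 5B)
    are rounded up to the larger tier. Returns an empty list above 13B,
    where no recommendation is given.
    """
    if params_billion <= 0:
        raise ValueError("model size must be positive")
    for max_size, gpus in TIERS:
        if params_billion <= max_size:
            return list(gpus)  # copy so callers cannot mutate the table
    return []
```

An empty result means the guidance does not cover that model size, not that the model cannot run.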

Which IDEs does Tabby support?

Tabby supports Visual Studio Code, VSCodium, all IntelliJ Platform IDEs including IDEA, PyCharm, GoLand, and Android Studio (build 2023.1+), plus Vim.

Can I use my own AI models with Tabby?

Yes, Tabby is compatible with major coding LLMs like CodeLlama, StarCoder, and CodeGen. You can use and combine preferred models without custom implementation.

Does Tabby send my code to external servers?

Not in a self-hosted deployment. Tabby is self-contained and needs no external cloud services, so all processing occurs on your infrastructure and you keep complete control over data retention and privacy policies.

How does Tabby compare in code completion speed?

Tabby optimizes the entire stack, from IDE extensions to model serving, and uses an adaptive caching strategy to return completions in under a second. Actual performance depends on your hardware and chosen model size.